Edmund West explores the history of the Deaf community’s use of technology to communicate with the hearing world

It may soon be possible for deaf people to sign at a webcam and immediately see their gestures become words on a screen, like a deaf version of voice-to-text.

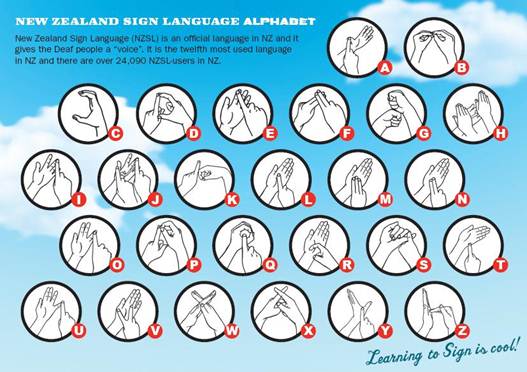

For those born deaf, life can be very lonely. Speech does not come naturally to you if you’ve never heard the sound of your own voice, and neither does lip reading. What does come naturally to the deaf is sign language. A group of deaf children who have never been taught to sign will make up their own. Sign is the basis of deaf culture.

In deaf education, there has always been a debate between oralists (who believe deaf children should be taught through lip reading) and manualists (who believe they should be taught through sign language). Signing was virtually banned at the 1880 Milan congress. This is despite the fact that deaf pupils do as well as everyone else when taught with sign. When they are taught orally, they fall further and further behind because they have to spend nearly half their education just learning to lip read.

Making Waves

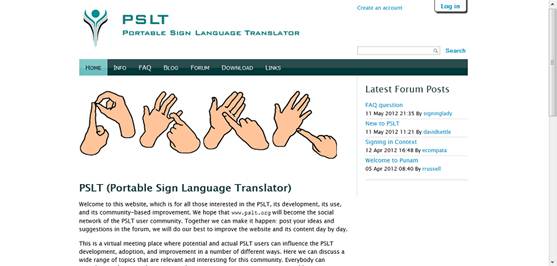

Website: pslt.org

The Portable Sign Language Translator (PSLT) app (www.pslt.org) has been created by Technabling, a company of fewer than ten people. Technabling uses software to assist in the day-to-day living of vulnerable people.

The company was founded by Dr Ernesto Compatangelo, a lecturer in computing science at the University of Aberdeen. He explained, “The aim of the technology is to empower sign language users by enabling them to overcome the communication challenges they can experience, through portable technology. Their signs are immediately translated into text, which can be read by the person they are conversing with. The intention is to develop an app that is easily accessible and could be used on different devices. One of the most innovative and exciting aspects of the technology is that it allows sign language users to actually develop their own signs for concepts and terms they need to have in their vocabulary.”

This last feature is vital, because some signers also have problems with hand-eye coordination and may not be able to sign in the standard way.

The app has been prototyped for the Making Waves competition, part of the government’s Small Business Research Initiative (SBRI).

The software captures the gestures as a video stream, matches this against its library of signs and creates sentences in real time. It can work on smartphones, tablets and laptops running Android, Linux or Windows.
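To make the matching step more concrete, here is a minimal sketch of one way a gesture could be compared against a library of signs. It is not the PSLT's actual method, which the article does not describe: the feature vectors, the `SIGN_LIBRARY` contents, the `max_distance` threshold and the function names are all assumptions invented for illustration, and a simple nearest-neighbour comparison stands in for whatever the real software does.

```python
import math

# Hypothetical library: each sign is stored as a short feature vector.
# The numbers are invented; a real system would derive them from video.
SIGN_LIBRARY = {
    "hello": [0.9, 0.1, 0.0],
    "thanks": [0.1, 0.8, 0.2],
    "ball": [0.0, 0.2, 0.9],
}


def match_sign(features, library=SIGN_LIBRARY, max_distance=0.5):
    """Return the closest sign in the library, or None if nothing is close."""
    best_word, best_dist = None, float("inf")
    for word, template in library.items():
        dist = math.dist(features, template)  # Euclidean distance
        if dist < best_dist:
            best_word, best_dist = word, dist
    return best_word if best_dist <= max_distance else None


def to_sentence(stream):
    """Match each gesture in a stream and join the recognised words."""
    words = (match_sign(features) for features in stream)
    return " ".join(word for word in words if word is not None)
```

In a sketch like this, a user-defined sign would simply be a new entry in the library, which is one plausible reading of how personal signs could be supported.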

What if one sign has more than one meaning? On the PSLT forum, Dr Compatangelo acknowledged the problem: “The BSL signs for ‘ball’ and ‘world’ are the very same… we have equipped the PSLT with a natural language disambiguation mechanism. For instance, if you sign something like ‘I kick the ball/world’ the PSLT will decide that what you are likely to mean is ‘I kick the ball’ rather than ‘I kick the world’. Of course, the angry person who really means that s/he wants to ‘kick the world’, in a figurative way, I suppose, will either have to rephrase the sentence or to provide a further sentence for clarification. Again, the very same happens when talking: if I say that I want to burn a log, does it mean that I want to burn a piece of wood or just a list of all my access records?”
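The idea behind the ball/world example can be sketched in a few lines. This is only an illustration of context-based disambiguation, not the PSLT's mechanism: the `PLAUSIBILITY` scores, the `AMBIGUOUS_SIGNS` table and the `disambiguate` function are hypothetical, and the numbers are made up.

```python
# Hypothetical plausibility scores for (verb, noun) pairs, invented for
# illustration. A real system might estimate these from a text corpus.
PLAUSIBILITY = {
    ("kick", "ball"): 0.9,
    ("kick", "world"): 0.1,
    ("travel", "world"): 0.8,
    ("travel", "ball"): 0.05,
}

# Signs that correspond to more than one English word.
AMBIGUOUS_SIGNS = {"BALL/WORLD": ["ball", "world"]}


def disambiguate(verb, sign):
    """Pick the reading of a sign that fits best with the given verb."""
    # Unambiguous signs have a single candidate: their own lowercased name.
    candidates = AMBIGUOUS_SIGNS.get(sign, [sign.lower()])
    # Unknown pairs score 0.0; on a tie the first candidate wins.
    return max(candidates, key=lambda noun: PLAUSIBILITY.get((verb, noun), 0.0))
```

With these invented scores, `disambiguate("kick", "BALL/WORLD")` picks "ball", while `disambiguate("travel", "BALL/WORLD")` picks "world". As in the quote, a signer who really does mean "kick the world" would need to rephrase or add a clarifying sentence.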

Dr Ernesto Compatangelo will be speaking in Glasgow on 8th June for the Joint Information Systems Committee Regional Support Centre’s annual conference ‘Here Be Dragons’ (on Twitter, #iDragon).

Other companies in the Making Waves competition are Hassell Inclusion, Gamelab UK and Reflex Arc. Together they have created uKinect, which uses Microsoft’s motion tracker for the same purpose as the PSLT except it converts Makaton to text.

The software is still in Phase 1. Jonathan Hassell, head of Hassell Inclusion, said, “We’d like to invite colleges, training organisations and employers that work with people with learning difficulties, people on the autistic spectrum, and people who have communication difficulties after suffering a stroke, to get in touch with us if they’d like to help us shape the future of our innovative product.”

In Phase 2, Gamelab will use its share of the funding to build new apps and features. These include an app designed for autistic people, progress tracking for learning signs, video demonstrations of unfamiliar signs, educational games of varying difficulty and an app for creating sign dictionaries quickly and cheaply.

In the future, Technabling plans to convert signs to sounds and vice versa. On 4th April, it set up an online forum where users can exchange comments and sign dictionaries for free. The company will get $240,000 (of a total $800,000) of SBRI funds for Phase 2, in which it plans to include the whole BSL vocabulary and Makaton. It will also add background noise removal and an avatar that will convert typed text into signs.

uKinect is an innovative tool which uses Microsoft’s Kinect technology to recognise the Makaton sign language used by people with learning difficulties
