Why Was Sign Abandoned?

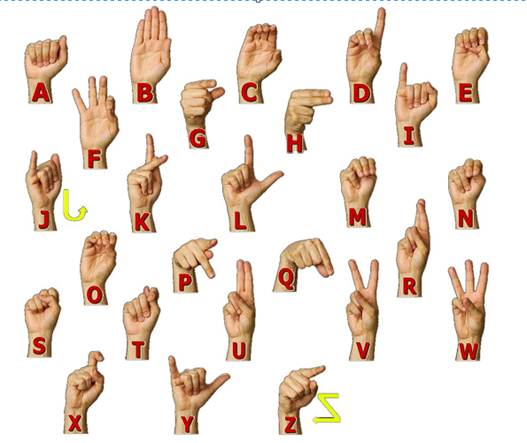

The core of the Oralists’ argument is that sign is not known to the general public, so it is of limited use and segregates the deaf. This argument would fall apart if you could translate your signs instantly.

Oralism was declared a failure in 1964. Sign has seen a revival since then, and there are around 100,000 people fluent in British Sign Language (BSL).

British Sign Language (BSL)

Deaf people have already benefited from technology. The first sign language was invented in 530 AD by Benedictine monks to get round their vows of silence. In 1964, the teletypewriter was invented so the deaf could talk on the phone through typing. 1972 saw the first TV captions. The advent of texts and email has removed some of the communication barriers between the deaf and hearing worlds. YouTube now adds closed captions to its videos.

Oliver Sacks, author of Seeing Voices, noted that the deaf are abandoning deaf clubs because of email, meaning they no longer practise sign as much – a worrying development. This may no longer be a problem thanks to free video calling via FaceTime or Skype. The deaf will be able to have sign conversations in real time and will not need as much bandwidth, because they don’t need audio. They will also be able to send texts by signing, though obviously they would have to use a dual-camera phone.

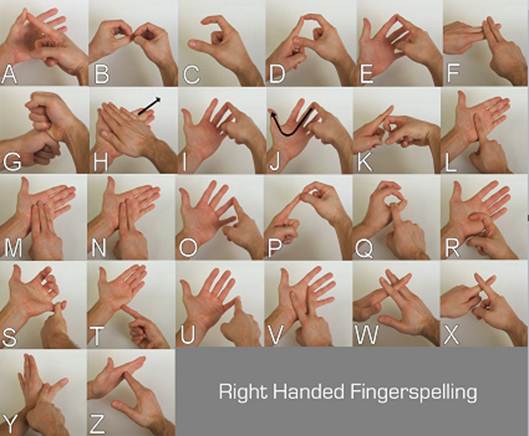

Could sign interpreters be made redundant by apps? Khalid Ashraf, a BSL on-screen presenter for ITV, is sceptical: “To our knowledge, it is completely impossible for computers to translate a gesture into text, considering the complexities of certain non-manual features, etc. Please note that deaf people do not just use hands for their communication but also use their facial expressions and body movements as part of conversation. Imagine how a spoken English language using double meanings would translate into French or other foreign languages.” He believes the only solution is for BSL to be made compulsory in school.

American Sign Language (ASL)

Interactive Solutions is a technology company that has been translating American Sign Language (ASL) for 13 years. The company explained its beginnings: “In October 1999, Interactive Solutions was approached by a family whose son was profoundly deaf. The young man, Morgan Greene, was 16 years old and a sophomore in high school. Though Morgan had a full-time sign language interpreter, he still had a fourth grade literacy level in tenth grade. He had requested that his parents help him find a company to design and develop a computer that would enable him to communicate with the hearing world when a sign language interpreter was not available.”

At the time, there was no speech-to-sign software. The company had created its own by March 2000, which could translate speech into text and sign language in real time. It could also translate speech or text into a computer voice transmitted to hearing aids and cochlear implants, though this feature is of no use to those born deaf. In March 2005, the company bought iCommunicator, now in its fifth version, and built a database of 30,000 words and 9,000 signs. What the software could not do was translate signs into speech or text.

Interactive Solutions is explicitly not after translators’ jobs: “The iCommunicator is not intended to replace sign language interpreters, but to serve as an alternative access technology for some persons who communicate in sign language. The iCommunicator is a fully integrated system that consists of a high-end laptop computer, iCommunicator software, a wireless microphone system and peripherals, and underlying software programs.”

The company expects its software to be used in emergencies, in mainstream schools, in chance encounters and by families learning ASL.

In May 2008, there was another attempt to automate sign translation. HandTalk is a glove with five embedded flex sensors, which translate gestures into a raw signal. The signal is converted to digital data and sent via Bluetooth to a mobile phone. The phone runs a Java 2 Micro Edition (J2ME) app that converts the data to text and then to speech. It was built by engineering students at Carnegie Mellon University using very cheap components.

David Sarji, the head of the project, explained how their software works: “An algorithm on the BlueSentry module converts the analogue data received from the sensors to digital data, in the form of numbers ranging from 0 to 65500. The HandTalk MIDlet in turn divides this data into segments that correspond to specific ranges of numeric values representing finger positions such as fully extended, fully bent, or partially bent… The team ‘trained’ HandTalk to recognise symbols by recording sensor data while a researcher held the glove in various sign positions, correlating these with specific signs, and mapping them to a database.”
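To make that description concrete, here is a rough Python sketch of the two steps Sarji outlines: sorting each sensor reading into a finger position, then looking up the resulting hand shape in a table built from recorded examples. It is an illustration only, not the HandTalk code (which ran as a J2ME app). The cut-off values, the position names and the `SignDatabase` class are assumptions made for this example; only the 0 to 65500 range and the five sensors come from the article.

```python
from collections import Counter

MAX_READING = 65500  # upper bound of the digitised sensor value, as quoted

# Assumed cut-offs: the article does not say where the real ranges lie.
EXTENDED_BELOW = 20000
BENT_FROM = 45000


def finger_position(reading):
    """Sort one sensor reading into a coarse finger position."""
    if not 0 <= reading <= MAX_READING:
        raise ValueError(f"reading out of range: {reading}")
    if reading < EXTENDED_BELOW:
        return "extended"
    if reading >= BENT_FROM:
        return "bent"
    return "partial"


def hand_shape(readings):
    """Turn five sensor readings (one per finger) into a hand shape."""
    if len(readings) != 5:
        raise ValueError("expected one reading per finger (5 in total)")
    return tuple(finger_position(r) for r in readings)


class SignDatabase:
    """Hypothetical lookup table from hand shapes to sign labels."""

    def __init__(self):
        self._votes = {}  # hand shape -> Counter of labels seen in training

    def train(self, readings, label):
        """Record that these readings were captured while holding `label`."""
        shape = hand_shape(readings)
        self._votes.setdefault(shape, Counter())[label] += 1

    def recognise(self, readings):
        """Return the label most often recorded for this shape, or None."""
        votes = self._votes.get(hand_shape(readings))
        if not votes:
            return None
        return votes.most_common(1)[0][0]
```

Training would mean calling `train` with readings captured while a researcher holds each sign, for example `db.train([1000, 2000, 1500, 3000, 2500], "open hand")`, after which `db.recognise(...)` returns the stored label for any readings that fall into the same ranges. A table this coarse cannot tell apart signs that share a hand shape, and it ignores movement and facial expression, which is exactly the limitation Ashraf raises above.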

This method has a disadvantage: it requires the purchase of a new gadget, whereas the PSLT and uKinect are apps running on commonly used hardware – consoles, smartphones and tablets. In the future, embedded computing may become the norm. Bluetooth earpieces are common, so why not gloves?