This section covers topics — often considered to be outside the scope of a database professional — that are fundamental to the way that Windows manages memory and the applications running on it, including SQL Server. Understanding this information is a great differentiator among database professionals and it will give you the right foundation of knowledge to understand how all applications work with Windows.

1. Physical Memory

When the term physical memory is used, it’s usually in relation to RAM (random access memory), but it actually also includes the system page file. RAM is also referred to as primary storage, main memory, or system memory because it’s directly addressable by the CPU. It is regarded as the fastest type of storage you can use, but it’s volatile, meaning you lose what was stored when you reboot the computer. It’s also expensive and limited in capacity compared to nonvolatile storage such as a hard disk.

For example, Windows Server 2012 supports up to 4TB of RAM, but buying a server with that much memory will cost you millions of U.S. dollars, whereas it’s possible to buy a single 4TB hard disk for a few hundred dollars. Combine a few of those and you can have tens of TBs of very cost-effective storage space. Consequently, servers use a combination of hard disks to store data, which is then loaded into RAM where it can be worked with much faster.

By way of comparison, throughput for RAM modules is measured in gigabytes per second (GB/s) with nanosecond (ns) response times, whereas hard disk throughput is measured in megabytes per second (MB/s) with millisecond (ms) response times. Even solid-state storage technology, which is much faster than traditional disk, is typically still measured in MB/s throughput and with microsecond (µs) latency.

2. Maximum Supported Physical Memory

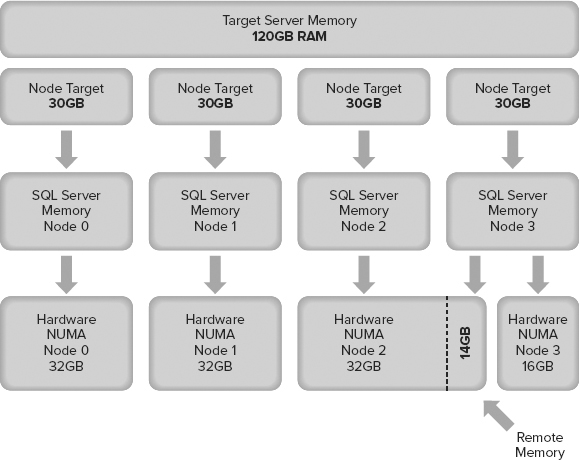

For ease of reference, Table 1 shows the maximum usable RAM for SQL Server 2012 by feature and edition.

TABLE 1: SQL Server 2012 Usable Memory by Edition and Feature

SQL Server 2012 Enterprise Edition and SQL Server 2012 Business Intelligence Edition support the maximum RAM of the underlying operating system, the most popular of which at the time of writing are Windows Server 2008 R2 Standard Edition, which supports 32GB, and Windows Server 2008 R2 Enterprise Edition, which supports 2TB.

Windows Server 2012, due for release at the end of 2012, supports a maximum of 4TB of RAM.

3. Virtual Memory

If all the processes running on a computer could only use addresses in physical RAM, the system would very quickly experience a bottleneck. All the processes would have to share the same range of addresses, which are limited by the amount of RAM installed in the computer. Because physical RAM is very fast to access and cannot be increased indefinitely (as just discussed in the previous section), it’s a resource that needs to be used efficiently.

Windows (and many other mainstream, modern operating systems) assigns a virtual address space (VAS) to each process. This provides a layer of abstraction between an application and physical memory so that the operating system can choose the most efficient way to use physical memory across all the processes. For example, two different processes can both use the memory address 0xFFF because it’s a virtual address and each process has its own VAS with the same address range.

Whether that address maps to physical memory or not is determined by the operating system or, more specifically (for Windows at least), the Virtual Memory Manager, which is covered in the next section.

The size of the virtual address space is determined largely by the CPU architecture. A 64-bit CPU running 64-bit software (also known as the x64 platform) is so named because it is based on an architecture that can manipulate values that are up to 64 bits in length. This means that a 64-bit memory pointer could potentially store a value between 0 and 18,446,744,073,709,551,615 to reference a memory address, which gives 18,446,744,073,709,551,616 (2^64) distinct addresses.

This number is so large that in memory/storage terminology it equates to 16 exabytes (EBs). You don’t come across that term very often, so to grasp the scale, here is what 16 exabytes equals when converted to more commonly used measurements:

- 16,384 petabytes (PB)

- 16,777,216 terabytes (TB)

- 17,179,869,184 gigabytes (GB)

17 billion GB of RAM, anyone?
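The conversions above can be checked with a few lines of Python. This is only an arithmetic sketch: it assumes binary units (1GB = 2^30 bytes, and so on), and the helper name `address_space_in` is made up for illustration.

```python
# Binary (1024-based) storage units, expressed in bytes.
UNITS = {"GB": 2 ** 30, "TB": 2 ** 40, "PB": 2 ** 50, "EB": 2 ** 60}


def address_space_in(unit: str, bits: int = 64) -> int:
    """Return the size of a `bits`-wide address space in the given unit."""
    return (2 ** bits) // UNITS[unit]


if __name__ == "__main__":
    # The highest address a 64-bit pointer can hold is 2^64 - 1.
    print(f"highest address: {2 ** 64 - 1:,}")
    for unit in ("EB", "PB", "TB", "GB"):
        print(f"{address_space_in(unit):,} {unit}")
```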

As you can see, the theoretical memory limits of a 64-bit architecture go way beyond anything that could be used today or even in the near future, so processor manufacturers implemented a 44-bit address bus instead. This provides a virtual address space on 64-bit systems of 16TB.

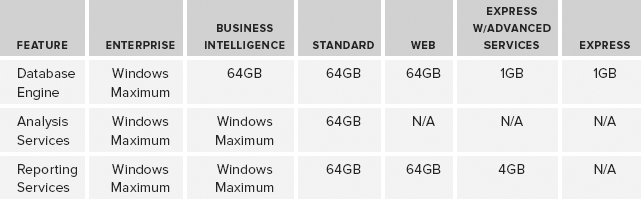

This was regarded as being more than enough address space for the foreseeable future and logically it is split into two ranges of 8TB: one for the process and one reserved for system use. These two ranges are commonly referred to as user mode and kernel mode address space and are illustrated in Figure 1. Each application process (e.g., SQL Server) can access up to 8TB of VAS, and therefore up to 8TB of RAM, depending on operating system support (remember that Windows Server 2012 supports 4TB of RAM, so we’re halfway there).

FIGURE 1
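As a rough check on the figures in this section, the following Python sketch derives the 16TB address space from a 44-bit address and splits it into the two 8TB ranges. The function name `vas_split` is hypothetical, and the even user/kernel split is simply taken from the text rather than queried from Windows.

```python
TB = 2 ** 40  # one terabyte in bytes (binary units)


def vas_split(address_bits: int = 44) -> dict:
    """Return total, user mode and kernel mode address space sizes in TB."""
    total_bytes = 2 ** address_bits
    half_bytes = total_bytes // 2  # assumption: an even user/kernel split
    return {
        "total_tb": total_bytes // TB,
        "user_tb": half_bytes // TB,
        "kernel_tb": half_bytes // TB,
    }


if __name__ == "__main__":
    print(vas_split())  # sizes for a 44-bit address
```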

3.1 Virtual Memory Manager

The Virtual Memory Manager (VMM) is the part of Windows that links together physical memory and virtual address space. When a process needs to read from or write something into memory, it references an address in its VAS; and the VMM will map it to an address in RAM. It isn’t guaranteed, however, to still be mapped to an address in RAM the next time you access it because the VMM may determine that it needs to move your data to the page file temporarily to allow another process to use the physical memory address. As part of this process, the VMM updates the VAS address and makes it invalid (it doesn’t point to an address in RAM anymore). The next time you access this address, it has to be loaded from the page file on disk, so the request is slower — this is known as a page fault and it happens automatically without you knowing.

The portion of a process’s VAS that currently maps to physical RAM is known as the working set. If a process requests data that isn’t currently in the working set, that data must be brought back into the working set before it can be used. When the page is still on the standby list in physical memory, this is a soft page fault; when it has to be read from the page file, it is a hard page fault. To resolve a hard page fault, the VMM retrieves the data from the page file, finds a free page of memory (either from its list of free pages or from another process), writes the data from the page file into that page, and then maps the new page back into the process’s virtual address space.

On a system with enough RAM to give every process all the memory it needs, the VMM doesn’t have to do much other than hand out memory and clean up after a process is done with it. On a system without enough RAM to go around, the job is a little more involved. The VMM has to do some work to provide each process with the memory it needs when it needs it. It does this by using the page file to temporarily store data that a process hasn’t accessed for a while. This process is called paging, and the data is often described as having been paged out to disk.

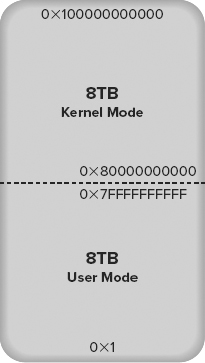

The Virtual Memory Manager keeps track of each mapping for VAS addresses using page tables, and the mapping information itself is stored in a page table entry (PTE). This is illustrated in Figure 2 using two SQL Server instances as an example. Note that the dashed arrow indicates an invalid reference that will generate a hard page fault when accessed, causing the page to be loaded from the page file.

FIGURE 2
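The mapping and paging behavior described in this section can be illustrated with a toy model in Python. This is a heavily simplified sketch, not how Windows implements the VMM: the class `ToyVMM`, its attributes, and the least-recently-used eviction rule are all assumptions made for illustration, soft page faults are not modeled, and addresses are treated as whole page numbers.

```python
from collections import OrderedDict


class ToyVMM:
    """Toy virtual memory manager: per-process mappings, paging, hard faults."""

    def __init__(self, ram_frames: int):
        self.free_frames = list(range(ram_frames))
        # (process id, virtual page) -> physical frame, oldest access first.
        self.resident = OrderedDict()
        # (process id, virtual page) pairs currently paged out to "disk".
        self.page_file = set()
        self.hard_faults = 0

    def access(self, pid: int, vpage: int) -> int:
        """Touch a virtual page and return the physical frame backing it."""
        key = (pid, vpage)
        if key in self.resident:            # valid mapping: already in RAM
            self.resident.move_to_end(key)
            return self.resident[key]
        if key in self.page_file:           # invalid mapping: hard page fault
            self.hard_faults += 1
            self.page_file.discard(key)
        if not self.free_frames:            # no free page: take one from
            victim, frame = self.resident.popitem(last=False)  # the oldest
            self.page_file.add(victim)      # victim is paged out
            self.free_frames.append(frame)
        frame = self.free_frames.pop()
        self.resident[key] = frame          # map the page into the VAS
        return frame


if __name__ == "__main__":
    vmm = ToyVMM(ram_frames=2)
    # Two processes use the same virtual page but get different frames.
    print(vmm.access(1, 0xFFF), vmm.access(2, 0xFFF))
    vmm.access(1, 0x1000)   # RAM is full, so process 1's first page is paged out
    vmm.access(1, 0xFFF)    # touching it again causes a hard page fault
    print("hard faults:", vmm.hard_faults)
```

Keying the mappings by process as well as page number mirrors the idea that each process has its own VAS, so the same virtual address in two processes never collides.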

3.2 Sizing the Page File

Determining the optimal size of a page file has been a long-running debate. By default, Windows manages the size of your page file, recommending a page file size of 1.5 times the size of RAM.

It won’t hurt performance to leave the default in place, but the debate starts when there are large amounts of RAM in a server and not enough disk space on the system drive for a full-size page file.

The primary purpose of a page file is to allow Windows to temporarily move data from RAM to disk to help it manage resources effectively. When a page file is heavily used, it indicates memory pressure; and the solution is to optimize your memory resources or buy more RAM, rather than to optimize your page file.

If you have disk space concerns on your page file drive, then setting the page file to 50% of total available RAM would be a safe bet.

At one client, where I was delivering a SQL Server Health Check, one of their servers had 96GB of RAM and a 96GB page file. Page file usage was minimal during the day, but every night a SQL Server Analysis Services cube was being rebuilt, which required so much memory that 20GB of the page file was being used during the build. This amount of page file usage is extreme but even a page file sized at 50% would have been more than enough. They upgraded the RAM to 128GB the next week.
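To put numbers on the sizing options discussed above, here is a small Python sketch that compares the 1.5 times RAM recommendation with the 50% setting, using the 96GB server and 20GB peak usage from the client example. The function name `page_file_size_gb` is made up for illustration, and the calculation is plain arithmetic rather than anything Windows exposes.

```python
def page_file_size_gb(ram_gb: float, ratio: float) -> float:
    """Return a page file size in GB as a simple multiple of installed RAM."""
    return ram_gb * ratio


if __name__ == "__main__":
    ram_gb = 96          # RAM on the server in the client example
    peak_usage_gb = 20   # page file usage seen during the nightly cube build

    for label, ratio in (("1.5 x RAM", 1.5), ("50% of RAM", 0.5)):
        size = page_file_size_gb(ram_gb, ratio)
        headroom = size - peak_usage_gb
        print(f"{label}: {size:.0f}GB page file, {headroom:.0f}GB headroom")
```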

Another argument for full-size page files is that they are required to take full memory dumps. While that is correct, it is extremely unlikely that Microsoft support will ever investigate a full memory dump because of the sheer size of it, and certainly never on the first occurrence of an issue. This then gives you time to increase the size of your page file temporarily at Microsoft’s request to gather a full dump should the need ever actually arise.

4. NUMA

Non-Uniform Memory Access (NUMA), sometimes expanded as Non-Uniform Memory Architecture, is a hardware design that improves server scalability by removing motherboard bottlenecks. In a traditional architecture, every processor has access to every memory bank across a shared system bus to a central memory controller on the motherboard. This is called symmetric multiprocessing (SMP) and it has limited scalability because the shared system bus quickly becomes a bottleneck when you start to increase the number of processors.

In a NUMA system, each processor has its own memory controller and a direct connection to a dedicated bank of RAM, which is referred to as local memory, and together they’re represented as a NUMA node.

A NUMA node can access memory belonging to another NUMA node but this incurs additional overhead and therefore latency — this is known as remote memory.

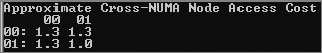

Coreinfo, a free tool from Sysinternals that can be found on the TechNet website, displays a lot of interesting information about your processor topology, including a mapping of the access cost for remote memory, by processor. Figure 3 shows a screenshot from a NUMA system with two nodes, indicating the approximate cost of accessing remote memory as 1.3 times that of local — although latency in the tests can produce outlying results as you can see in the figure. 00 to 00 is actually local and should report a cost of 1.0.

FIGURE 3
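To get a feel for what a remote access cost of roughly 1.3 means in practice, the following Python sketch computes a blended relative cost for a workload that makes some fraction of its memory accesses to a remote node. The function name `average_access_cost` and the simple weighted-average model are assumptions for illustration; only the 1.0 and 1.3 figures come from the Coreinfo discussion above.

```python
def average_access_cost(remote_fraction: float, remote_cost: float = 1.3) -> float:
    """Blend local (cost 1.0) and remote accesses into one relative cost."""
    if not 0.0 <= remote_fraction <= 1.0:
        raise ValueError("remote_fraction must be between 0 and 1")
    local_fraction = 1.0 - remote_fraction
    return local_fraction * 1.0 + remote_fraction * remote_cost


if __name__ == "__main__":
    for fraction in (0.0, 0.25, 0.5, 1.0):
        cost = average_access_cost(fraction)
        print(f"{fraction:.0%} remote accesses: relative cost {cost:.3f}")
```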

SQL Server’s Use of NUMA

SQL Server creates its own internal nodes on startup that map directly on to NUMA nodes, so you can query SQL Server directly and get a representation of the physical design of your motherboard in terms of the number of processors, NUMA nodes, and memory distribution.

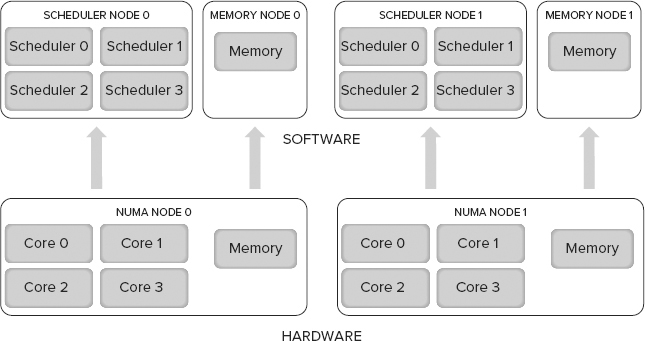

For example, Figure 4 shows a representation of a server with two processors, each with four cores and a bank of local memory that makes up a NUMA node. When SQL Server starts, the SQLOS identifies the number of logical processors and creates a scheduler for each one in an internal node.

FIGURE 4

The memory node is separate from the scheduler node, not grouped together as it is at the hardware level. This provides a greater degree of flexibility and independence; it was a design decision to overcome memory management limitations in earlier versions of Windows.

SQL Server NUMA CPU Configuration

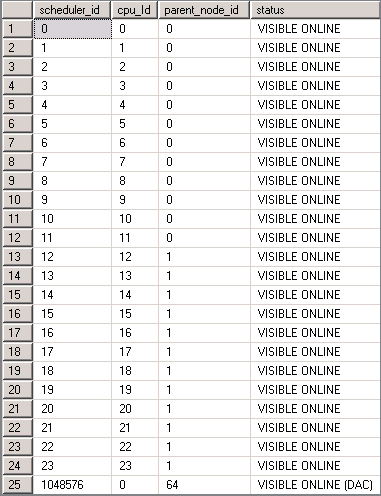

You can view information about the NUMA configuration in SQL Server using several DMVs. Figure 5 shows results from sys.dm_os_schedulers on a server with 24 logical processors and two NUMA nodes. The parent_node_id column shows the distribution of schedulers and CPU references across the two NUMA nodes. You can also see a separate scheduler for the dedicated administrator connection (DAC), which isn’t NUMA aware.

FIGURE 5

The sys.dm_os_nodes DMV also returns information about CPU distribution, containing a node_id column and a cpu_affinity_mask column, which when converted from decimal to binary provides a visual representation of CPU distribution across nodes. A system with 24 logical processors and two NUMA nodes would look like the following:

| node_id | dec-to-bin CPU mask |
| --- | --- |
| 0 | 000000000000111111111111 |
| 1 | 111111111111000000000000 |

When SQL Server starts, it also writes this information to the Error Log, which you can see for the same server in Figure 6.

FIGURE 6
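The decimal-to-binary conversion of cpu_affinity_mask described above can be sketched in Python. The helper names are hypothetical, and the two decimal values used in the example (4095 and 16773120) are simply the decimal equivalents of the binary masks shown for the 24-processor, two-node system, not output captured from a real server.

```python
def affinity_mask_to_binary(mask: int, logical_cpus: int) -> str:
    """Render a decimal affinity mask as a zero-padded binary string."""
    return format(mask, f"0{logical_cpus}b")


def cpus_in_mask(mask: int) -> list:
    """List the CPU ids whose bit is set (bit 0 is the rightmost digit)."""
    return [cpu for cpu in range(mask.bit_length()) if (mask >> cpu) & 1]


if __name__ == "__main__":
    # Decimal masks for node 0 and node 1 on a 24-CPU, two-node system.
    for node_id, mask in ((0, 4095), (1, 16773120)):
        print(node_id, affinity_mask_to_binary(mask, 24), cpus_in_mask(mask))
```

Reading the string from right to left gives CPU 0 upward, so node 0 owns CPUs 0 to 11 and node 1 owns CPUs 12 to 23.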


SQL Server NUMA Memory Configuration

SQL Server memory nodes map directly onto NUMA nodes at the hardware level, so you can’t do anything to change the distribution of memory across nodes.

SQL Server is aware of the NUMA configuration of the server on which it’s running, and its objective is to reduce the need for remote memory access. As a result, the memory objects created when a task is running are created within the same NUMA node as the task whenever it’s efficient to do so. For example, if you execute a simple query and it’s assigned a thread on scheduler 0 in node 0, then SQL Server will try to use the memory in node 0 for all new memory requirements to keep it local.

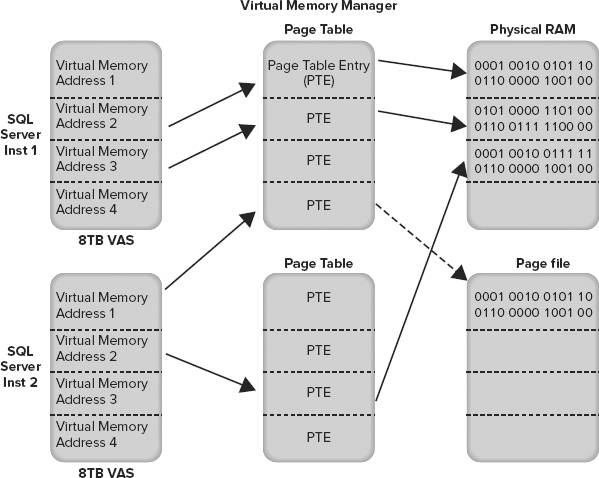

How much memory SQL Server tries to use in each hardware NUMA node is determined by the target server memory, which is affected by the max server memory option.

Whether you configure Max Server Memory or not, SQL Server will set a target server memory, which represents the target for SQL Server memory usage. This target is then divided by the number of NUMA nodes detected to set a target for each node.

If your server doesn’t have an even distribution of RAM across the hardware NUMA nodes on your motherboard, you could find yourself in a situation in which you need to use remote memory just to meet SQL Server’s target memory. Figure 7 illustrates this; the target server memory of node 3 cannot be fulfilled with local memory because the RAM has not been evenly distributed across NUMA nodes on the motherboard.

FIGURE 7
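The per-node arithmetic behind this situation can be sketched in Python. The function name `remote_memory_needed` and the RAM figures in the example are hypothetical (they are not the values shown in Figure 7), and the sketch assumes all local RAM on a node is available to SQL Server, which ignores the operating system and other processes.

```python
def remote_memory_needed(target_server_memory_gb: float, local_ram_gb: list) -> list:
    """Return, per NUMA node, how much of its target cannot be met locally."""
    # The overall target is divided evenly by the number of NUMA nodes.
    per_node_target = target_server_memory_gb / len(local_ram_gb)
    return [max(0, per_node_target - local) for local in local_ram_gb]


if __name__ == "__main__":
    # Hypothetical server: four nodes, with less RAM installed on node 3.
    local_ram = [32, 32, 32, 16]
    shortfalls = remote_memory_needed(100, local_ram)
    for node_id, shortfall in enumerate(shortfalls):
        print(f"node {node_id}: {shortfall:.0f}GB must come from remote memory")
```

With an even distribution of RAM every shortfall is zero, which is why balanced memory across NUMA nodes matters more than the total alone.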